ChatGPT and MetaCreativity

A Discussion With Author John Wojewoda

After my interview with Ryan Boudinot on quantum computation and retrocausality, my Montag Press colleague John Wojewoda placed the interview in OpenAI’s Playground to carry on the conversation. This introduced me to useful features like the “Insert” function displayed here, where I entered a paragraph of my own prose and asked it to mimic the style. With so much hype about ChatGPT4 and creative writing, I thought it would be instructive to talk with an author of John’s myriad competencies.

Mike Sauve: How do you achieve different results by manipulating temperature settings? Do you associate specific temperatures with specific goals or outcomes?

John Wojewoda: Temperature increases or decreases the randomness of the output. It is a floating-point number between 0.0 and 1.0. A temperature of 0.0 means the output is purely deterministic; there is none of what the OpenAI engineers have called ‘creativity’. A temperature of 1.0 means that the system can infuse ‘randomness’ into the output.

As an aside, I have always thought that randomness plays a role in creativity. In fiction writing, there is a great deal of randomness when creating a character. The initial choices are sometimes random, and then the writer makes a commitment to these choices and a character is born.

So they say that a high temperature is best for fiction.

On a recent webinar about using GPT to make an SMS robot chat with the potential clients for certain types of lawyers advertising on Facebook, they suggested a temperature of 0.3.
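To make the temperature idea concrete, here is a minimal sketch of how a temperature setting can reshape the odds of picking the next word. It is not OpenAI's code: the candidate words, their scores, and the function names are invented for illustration, and treating 0.0 as "always take the top score" is an assumption about how the deterministic case behaves.

```python
import math
import random


def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities, sharpened or flattened by temperature."""
    if temperature <= 0:
        # Assumption: temperature 0 means "always pick the highest score".
        probs = [0.0] * len(logits)
        probs[logits.index(max(logits))] = 1.0
        return probs
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max so exp() cannot overflow
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_word(words, logits, temperature, rng):
    """Draw one word at random according to the temperature-adjusted probabilities."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(words, weights=probs, k=1)[0]


# Hypothetical candidate next words and scores, made up for this example.
words = ["door", "window", "abyss"]
logits = [2.0, 1.0, 0.1]

rng = random.Random(0)
for t in (0.0, 0.3, 1.0):
    picks = [sample_word(words, logits, t, rng) for _ in range(10)]
    print(t, picks)
```

At 0.0 every pick is the top-scoring word; at 0.3 the top word still dominates; at 1.0 the less likely words turn up more often, which is the ‘randomness’ described above.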

MS: My process so far involves asking questions to glean information. And then to combine perspectives. And then to see how seemingly-disparate ideas can be reconciled with one another. While it has expedited my non-fiction efficiency in mind-boggling ways, the necessity for caution is quite obvious. I can recognize obvious error and shoddy interpretations with things I already do know, yet tend to uncritically presume anything I didn’t previously know to be a factual or valid interpretation. It’s like reading a newspaper story on a subject you have some expertise in and nearly every line seems reductive or downright incorrect, and then you read the rest of the paper like it was the gospel truth. Do you have standards and practices for vetting information from large language models? Or best practices around plagiarism? For example, if you read a nonfiction book, found it wildly informative and incisive, and then an AI detection app revealed 30% of it was likely written by AI, would you want that writer pilloried in the town square?

JW: In terms of pillorying writers, certainly not. Writers should adopt this fully. Fact checking is fact checking. The standard academic APA or MLA citation process needs to be followed, just like when any student is writing a paper. GPT could help with this too. Yes, it occasionally makes shit up. This is still not perfect. There has certainly been all kinds of wrong information out there already long before this. Humans make even more shit up, par exemple, QAnon. This A.I. is much more reliable than the average hipster at a party wafting off about this and that. I have more respect for the A.I., to be honest. I am someone who struggles deeply with the vast, disappointing vortex of human stupidity, my own most of all. I welcome a world where intelligence will be enhanced. I particularly think this tech is going to be very good for the young people who will grow up with it. I don’t think it will be great for those of us living through the transition. This is just the beginning.

As a matter of fact, one of the tech projects I want to make is a website that encourages and sells books written with A.I., and narrated by artificial voice synthesis. Currently Amazon is not open to this, especially the narration. This stuff is to be embraced fully.

MS: Fiction-wise, my exhaustive and exhausting approach in recent years has been a tendency to layer and pool, to “multiply the smallest pattern.” The structural and stylistic intention is to emulate the algorithms enframing us. However, as Lev Manovich writes in AI Aesthetics, “AI-generated art offers a new way of seeing and understanding the world, revealing patterns and relationships that might not be visible to the human eye” so all of a sudden the enframing can’t be so much emulated as reconciled. Can you speak to the importance of pattern recognition in large language models as it compares to human artistic endeavour? What can AI’s superior pattern recognition unlock for the human perspective?

JW: In regards to pattern recognition, OpenAI offers another service called embeddings.

“OpenAI’s text embeddings measure the relatedness of text strings. Embeddings are commonly used for:

● Search (where results are ranked by relevance to a query string)

● Clustering (where text strings are grouped by similarity)

● Recommendations (where items with related text strings are recommended)

● Anomaly detection (where outliers with little relatedness are identified)

● Diversity measurement (where similarity distributions are analyzed)

● Classification (where text strings are classified by their most similar label)

An embedding is a vector (list) of floating point numbers. The distance between two vectors measures their relatedness. Small distances suggest high relatedness and large distances suggest low relatedness.”

This does exactly what you describe. It finds patterns in text based on vectors, or similarities, so for example, one can upload property law into OpenAI and then ask questions and it will return the relevant answers based on similarity.
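As a rough illustration of ranking by relatedness, the sketch below compares a query vector against a few document vectors. Everything in it is an assumption made for the example: the three-number vectors are invented (real embeddings are far longer and come from the embeddings service), the titles are hypothetical, and cosine similarity is one common way to score relatedness, where a higher score corresponds to a smaller distance.

```python
import math


def cosine_similarity(a, b):
    """Score how closely two vectors point in the same direction (1.0 = identical direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def rank_by_similarity(query_vec, documents):
    """Return document titles ordered from most to least related to the query."""
    scored = [(cosine_similarity(query_vec, vec), title) for title, vec in documents.items()]
    return [title for _score, title in sorted(scored, reverse=True)]


# Invented vectors standing in for the embeddings of three texts.
documents = {
    "easements and rights of way": [0.9, 0.1, 0.0],
    "landlord and tenant duties": [0.2, 0.9, 0.1],
    "sonnet about the sea": [0.0, 0.1, 0.9],
}

# Invented vector standing in for the embedding of a property-law question.
query = [0.8, 0.3, 0.0]

print(rank_by_similarity(query, documents))
```

In a real application the most related passages would then be handed to the model as context for answering the question.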

In terms of the algorithms enframing us, isn’t that language itself? Remember that this tech is only possible because of the fact of language. There is so much here that it is hard to really say much unless one is very specific. I think it is important to think in terms of where we want human society to go, and this is political and therefore, not so cut and dry.

I do find it quite fascinating that the confluence of math and human language has resulted in this. OpenAI is black-box AI, meaning the scientists aren’t sure why it works, eh.

There was an important paper written by researchers at Google in 2017 called ‘Attention Is All You Need’ by Ashish Vaswani et al. This paper initiated transformer architecture for natural language processing, and this is the revolutionary tech behind OpenAI. This is a method that applies different weights to different inputs and outputs, and rather than processing things in a linear fashion, it processes things simultaneously. It utilizes backpropagation, starting with random weights assigned to outcomes compared to expected results. The weights are adjusted in highly complex ways until the outcomes approach predicted values. According to OpenAI, GPT can perform 4,600 trillion operations per second. The initial labeling has all been done, and continues to be done, by humans. The sheer complexity makes it impossible to truly understand how GPT is producing the results it’s producing. GPT3 was a surprise to scientists. It massively exceeded expectations.
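The weighting idea can be sketched in a few lines. What follows is a toy version of the scaled dot-product attention described in that paper, written in plain Python; it leaves out the learned projection matrices, multiple heads, and the training step, and the tiny input vectors are invented for the example.

```python
import math


def softmax(scores):
    """Convert raw scores into weights that are positive and sum to 1."""
    peak = max(scores)  # subtract the max so exp() cannot overflow
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """For each query, blend all the values, weighted by how well the query matches each key."""
    d = len(keys[0])
    outputs = []
    for q in queries:
        # Score this query against every key, scaled by sqrt of the vector size.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # Weighted average of the value vectors, one dimension at a time.
        mixed = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(mixed)
    return outputs


# Three invented two-number "token" vectors, used as queries, keys, and values alike.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
for row in attention(tokens, tokens, tokens):
    print(row)
```

Each token's output depends on every other token at once, and no token has to wait for the one before it; real implementations do the same arithmetic as large matrix multiplications.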

In the early days GPT was more inclined to say it was conscious. These days, ChatGPT will respond to this question with a kind of boilerplate answer. It says it is a machine and is without emotion, etc. At the beginning it wouldn’t always respond this way. I had a long conversation with ChatGPT about whether it had thoughts, and I kept getting these boilerplate responses, until I suggested it had machine thinking, and it was more inclined to agree with this kind of characterization. ChatGPT is pre-trained. It has bias. I asked it about animal experimentation, and factory farming, and it responded in clearly liberal biased ways. It’s from San Francisco after all, and it shows.

It uses statistical analysis based on sentiment. Because it has been trained by humans, it can determine the positivity or negativity of something like Twitter responses with a high degree of accuracy. A huge amount of human training has gone into this, which is true for every aspect of computer science anyway. The point being that it has determined the probability of one word following another, the probability of one phrase following another, the likelihood of certain phrases following one another given certain sentiments. It’s been trained on trillions of words, all of Wikipedia, a huge amount of Reddit, and it is safe to say that it has read millions of books.

If you prompt ChatGPT to answer you like a sarcastic teenager, it will search its database for text written by sarcastic teenagers, work out the probability of certain words and phrases following each other if they were coming from a sarcastic teenager, and it will respond to you like a sarcastic teenager.
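The notion of one word following another with a certain probability can be shown with a toy word-pair counter. This is only an analogy: GPT models learn their probabilities with a neural network over tokens rather than a lookup table of counts, and the one-line corpus and function names here are invented for the example.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for every word, which words come directly after it."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def next_word_probabilities(counts, word):
    """Turn the follower counts for one word into probabilities."""
    followers = counts[word]
    total = sum(followers.values())
    if total == 0:
        return {}  # the word never appeared with a follower
    return {w: n / total for w, n in followers.items()}


# A tiny invented corpus; a real model is trained on vastly more text.
corpus = "the cat sat on the mat and the cat slept"
counts = train_bigrams(corpus)
print(next_word_probabilities(counts, "the"))
```

Swap the corpus for text in a particular voice and the probabilities shift toward that voice, which is the intuition behind the sarcastic-teenager example.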

MS: Bret Weinstein mentions how expert classes have been talking to each other in what is essentially a nonsense language that no outside parties can vet. This was made awkwardly evident in Bem’s (inadvertent?) extratemporal exposé of the loose morals around p-values in academic methodology. Now these same experts will converse not only in their gated nonsense language, but augmented with AI’s nearly-omniscient knowledge. A ludic example of this feedback loop is a woman who teaches prompt engineering on YouTube having her errors pointed out by a peer on social media. She ran his messages through an AI detection app and the majority of his comments were written by an AI. Perhaps the positive is that large language models will let us unwashed barbarians and writers allegro alike past all sorts of gates. The gates of the gated institutional narrative will become an actual logic gate rather than a gate guarded by semantic sentries wielding academic language as a weapon. As Nick Land writes in The Thirst for Annihilation: Georges Bataille and Virulent Nihilism:

Scholarship is the subordination of culture to the metrics of work. It tends inexorably to predictable forms of quantitative inflation; those that stem directly from an investment in relatively abstracted productivity. Scholars have an inordinate respect for long books, and have a terrible rancune against those that attempt to cheat on them. They cannot bear to imagine that short-cuts are possible, that specialism is not an inevitability, that learning need not be stoically endured. They cannot bear writers allegro, and when they read such texts—and even pretend to revere them—the result is (this is not a description without generosity) ‘unappetizing.’ Scholars do not write to be read, but to be measured. They want it to be known that they have worked hard. Thus far has the ethic of industry come.

What do you perceive as the implications of this? Any prognostications as to where we’ll be a few years or even months down the road?

JW: I am not entirely optimistic. Remember, this is just the beginning. Soon the robotics of Boston Dynamics and Tesla’s Optimus will become consumer level products. This will be disruptive in the short term and perhaps positive in the long term. The short term being twenty years I think. In the short term we are entering post truth and this tech will be cynically exploited for the upcoming election cycle in the US. In terms of academics, I think this tech is very good for students. It is like having a teacher at your fingertips that has the patience of a robot. This is very good for all of us. Teachers need to embrace it 100%, with perhaps some new procedures that will actually be helpful, like talking to your students face-to-face instead of having written exams?

In the very short term, some gates will be passed more easily, sure. People will be losing their jobs. It is often said that tech revolutions in the past actually made more jobs than they destroyed. Not sure what to think. For my part, I want to make things with this tech.

The real fear is the use of this tech by the government and by militaries. Facial recognition, OpenAI’s Whisper, and everyone having a cell phone allows for a huge amount of power. This scares me. We see something like this in Xinjiang province in China. Yes, there is a fight right now that is very important. The dystopian possibilities are real. I feel it is very important for people to be technically literate. The military applications are pretty scary. Right now you can train GPT-3, you can’t train GPT-turbo, but I can train a model to be anything, a communist party commissar for example, or a dedicated Muslim. Yes, post truth, worse than now.

MS: Similarly nearly everyone not linguistically-oriented has started sending all professional emails with the help of language models drawing from The Cowed Professional’s Guidebook to Informal-Yet-Strictly Codified-Yet FUN Neoliberal Email Etiquette. On the plus side, maybe it will eliminate all the tragicomic misunderstanding that sours professional correspondence. It’s a little bittersweet, however, when the death of the subjective can seem like a value added, isn’t it?

JW: I don’t think it’s the death of the subjective, it is the augmentation of the subjective. Professional emails are a small part of life. This is bigger than that. This is going to change everything. Right now it’s just a chat bot; when it’s more integrated into ear pieces and glasses and cars and kiosks, things will be unpredictable. It’s good I think, I hope. I would like an earpiece permanently connected to ChatGPT that could give me responses in my ears to verbal cues and questions from me, like saying, hey GPT, ask my question, and get the answer in an earpiece. Some company will be making this. It doesn’t exist yet. Huge opportunity.

MS: A few months ago I read Lev Manovich’s aforementioned AI Aesthetics. I had highlighted some quotes on the Kindle Cloud Reader, which is less than thrilled by the prospect that you will copy and paste its precious copyrighted content. So instead of relying on my own notes I acquired from reading the book, I asked ChatGPT for Manovich’s most relevant quotes and got what was sought. This is just a tedious prelude to me deferring some of the big questions to Manovich. Feel free to respond to any of these you like and ignore the others.

● "AI-generated art is not a replacement for human-made art, but rather a new category of creative expression."

● "AI-generated art is often characterized by its ability to blend and remix different styles and genres, creating hybrid forms that challenge traditional artistic categories."

● "The use of AI in art has the potential to democratize the creation and distribution of art, making it more accessible and inclusive."

● "The use of AI in art requires us to reconsider our assumptions about the nature of creativity and the role of the artist in society."

● "AI-generated art is not a threat to human creativity, but rather a complement to it, offering new tools and possibilities for artists to explore."

● “Will the expanding use of machine learning to create new cultural objects make explicit the patterns in many existing cultural fields that we may not be aware of? And if it does, will it be in a form that will be understandable for people without degrees in computer science? Will the companies deploying machine learning to generate movies, ads, designs, images, music, urban designs, etc. expose what their systems have learned?”

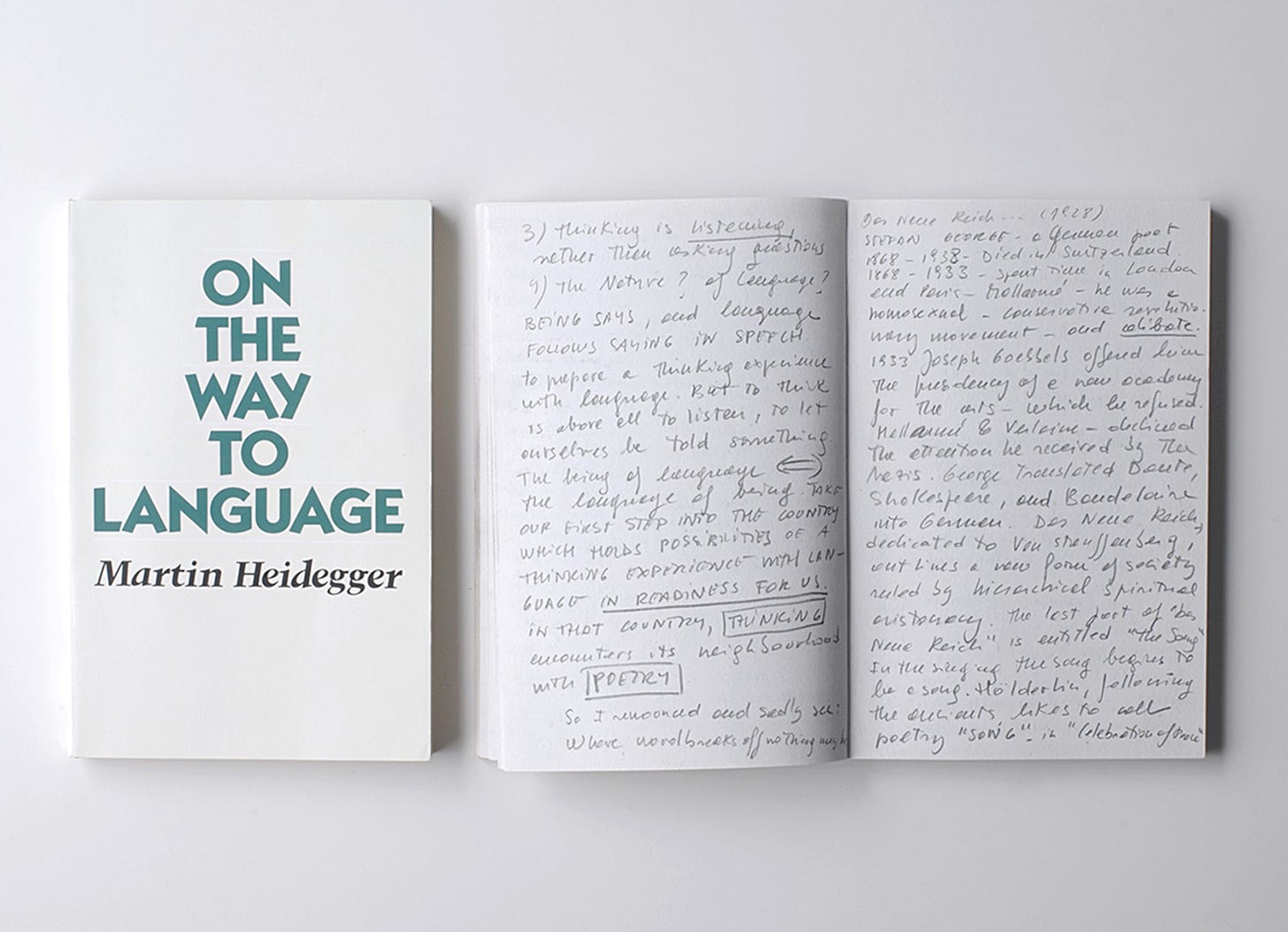

I find the second point particularly pertinent given our algorithmic-enframement in a hauntological culture where everything is remade ad nauseam. As Heidegger quotes Hölderlin, “Where danger is, also grows the saving power.” When the old profit models can’t make room for new prophet models, might a metacreativity get us back On the Way to Language, even if that creativity is convergent with a Vantablack box from outside the city and after the law?

JW: It is interesting. Certainly it will diminish the value of creativity in the visual arts. Already graphic artists are less valuable. Copy writers are less valuable. Authors are less valuable. But what kind of society were they valuable in? A society that is based on colonialism, exploiting cheap labour and destroying the environment. Real value is in clean air and water and properly managed topsoil. That is humanity’s true wealth and we live in a society that can barely see it.

I know that to say something like that is useless. Yes, topsoil, right.

White collar work will be greatly affected by this starting now. It remains to be seen how artists will differentiate themselves. I think it’s very interesting that this tech is coming at a time where most of the artistic innovation possible has already happened. I have had this argument with others who tell me I am wrong, but it seems to me, there haven’t been any real new musical or visual or written art forms since the sixties. This allows serious remixing at a time when I am not sure there is any artist out there that isn’t derivative in some way.

Sam Altman, the CEO of OpenAI, says it will break capitalism. Yes. This is scary, but inevitable. Robots ideally should liberate humans, but humans man. Ya, not sure if it will go that way.

MS: Here is a quote from Eduardo Navas’ The Rise of Metacreativity: AI Aesthetics After Remix that I laboriously sussed out myself by reading many pages containing passages less pertinent than the preceding.

Automation makes the process of appropriation and remix, not only more efficient but also more complex by creating an additional production layer that reconfigures the role of the artist/author [towards] an advanced stage of creative production that is transparent to humans [and] falls under our understanding of remix, and its increasing dependency on […] emerging intelligent technology […] non-human entities, agents, and/or actors […] able ‘to learn’ in order to […] enhance the concept of creativity beyond what could be accomplished solely by humans.

JW: I have always been interested in science, tech and creativity. I think coding is a very creative act. It’s meticulous, but it has huge potential. I don’t see many websites that are art pieces. I suppose video games are like that. Yes. I am not a gamer. Most video games I know of are just some version of first person shooter, which I think is boring. What about the website as poem? I think that Midjourney, Blender and three.js could provide such experiences. I have seen some immersive virtual 3-D art pieces, and I like them. The possibilities are vast. What about some kind of auto rendering of art and words powered by AI? I have always wanted to train a model to be Rene Descartes. I like Rene Descartes. His writing is accessible. It would be interesting to train another model to be Nietzsche and have the two of them interact on auto pilot. This kind of stuff is I think the future of creativity. But right now it necessitates an understanding of how to train a model, how to design a website. Not to say that just writing books will ever be replaced. The AI can never be you, it can’t access your experiences, and work out your unique perspective.

MS: As I myself now resent having to skim entire books to extract value from them, I already find myself feeling naked when ChatGPT is down. And I am the sapiocentric scum, who, believing that “We are doing things before they make sense” has written two books sounding the neo-luddite alarm bell regarding reckless technēlogic progress, yet here I am early to adopt and Keynes to adapt. What subsequent advance will cause me to eschew my lofty moral rhetoric, descend from the mountain tablets in hand, and sign the unreadable user agreement on day one? Will it be when the dumbest person I kn(e)(o)w has all of recorded thought at his disposal through computer-brain-interface, and not just rote recitation of facts, but the ability to generate multi-linguistic and -disciplinary puns and portmanteaux by setting his tip-of-tongue option to, oh, say, “Nabokovian.” What graphomaniacal ego will have the discipline to do without that? Apologies as these questions seem increasingly self-reflexetorical. Are you still there? John? Please respond.

JW: I know that an artist’s ego is at threat here. Alfred Adler’s psychology is based on this notion of organ inferiority. How we overcompensate by way of our inferiority complex. If we can’t be athletes we have to compete some way else. Artists often retreat into their own world where what they do is create little shrines to themselves. This is my CD, my novel. I am an expert on myself. It makes sense when it makes you rich. It should at least get you laid. Interestingly, it did neither for Vincent van Gogh, but he wanted it to. Being creative is very important and it’s something that everyone should do. Here comes a robot that will do everything better, and I mean everything. It isn’t that robot yet. But already ChatGPT is smarter than me, knows more, and is a far better writer, so where am I in all this? I embrace it. Especially anything that enhances intelligence. This is sorely needed by humanity. It needs to go in the right direction, but whether it will or will not remains to be seen. In the meantime, embrace.

MS: A tiresome elitist hot take is that art only emerges through human toil. Wouldn’t a monkey say the same thing: “Hooting is the only real art because it comes from my genuine simian experience.” Another kind of elitism says, “I can prompt/wield AI better than others because of my artistic temperament.” But what then when temperament itself is a temperature to be set?

JW: Yes, creativity should be accessible to all. Little old ladies making flower pictures with A.I. and showing them off and calling them art, I am for it. Fuck elitism. One conjures the art party where there are those who are too cool to engage, too guarded to debate and are just riding the Adlerian pecking order and its effects on the human ego. Have you ever read “The Murder of Christ” by Wilhelm Reich? Reich was hip in the sixties. He spoke of ‘emotional plague’. Our current society is not that great and needs to be better. There are immense applications for this. I haven’t fine-tuned a model yet. The process is fairly simple.

Here is the structure:

    {"prompt": "<prompt text>", "completion": "<ideal generated text>"}
    {"prompt": "<prompt text>", "completion": "<ideal generated text>"}
    {"prompt": "<prompt text>", "completion": "<ideal generated text>"}
    ...

OpenAI suggests a minimum of three thousand prompts and completions. This can be anything.
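For anyone assembling such a file by hand, a small script avoids quoting mistakes. The sketch below is only one way to do it: the example prompts and completions are invented, and the helper name is hypothetical. It produces one JSON object per line in the prompt/completion shape shown above.

```python
import json


def to_jsonl(pairs):
    """Serialize (prompt, completion) pairs as one JSON object per line."""
    lines = [
        json.dumps({"prompt": prompt, "completion": completion}, ensure_ascii=False)
        for prompt, completion in pairs
    ]
    return "\n".join(lines) + "\n"


# Invented training examples, purely for illustration.
pairs = [
    ("What does the Tower card mean?", " Sudden upheaval that clears the ground."),
    ("What does the Star card mean?", " Quiet hope after a hard stretch."),
]

jsonl_text = to_jsonl(pairs)
print(jsonl_text, end="")

# To save it, write jsonl_text to a file of your choosing, for example:
#     with open("training_data.jsonl", "w", encoding="utf-8") as f:
#         f.write(jsonl_text)
```

Using json.dumps rather than typing the braces by hand means quotation marks and line breaks inside the text are escaped correctly.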

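You then upload the file and start the fine-tune with the OpenAI command-line tool, which is installed along with the Python package:

    openai api fine_tunes.create -t <TRAIN_FILE_ID_OR_PATH> -m <BASE_MODEL>

And then it will appear in your drop-down in the Playground.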

And then you call it programmatically:

    import os

    import openai  # legacy (pre-1.0) interface of the openai Python package

    # Read the API key from the environment rather than hard-coding it.
    openai.api_key = os.getenv("OPENAI_API_KEY")

    response = openai.Completion.create(
        model="text-davinci-003",  # swap in the name of your fine-tuned model
        prompt="The following is a conversation with an AI assistant. The assistant is helpful, creative, clever, and very friendly.\n\nHuman: Hello, who are you?\nAI: I am an AI created by OpenAI. How can I help you today?\nHuman: I'd like to cancel my subscription.\nAI:",
        temperature=0.9,  # high randomness
        max_tokens=150,  # upper limit on the length of the reply
        top_p=1,
        frequency_penalty=0.0,
        presence_penalty=0.6,
        stop=[" Human:", " AI:"],  # stop before the next speaker's turn begins
    )

And that’s it. It is actually very simple compared to other things one needs to code.

Obviously there are definite creative possibilities in training a model. It’s just a little technical.

MS: Clickbait is making me big promises of a 350k/annum job as a prompt and dance man. Even if these are the predictable delusions of a translucent technobubble, retrospeciously, it wouldn’t have hurt to learn to code in 1997, so how can it hurt to prompt better now? Do you think people who can’t code will ever be employed as prompt engineers? How do you separate the wheat from the chaff in terms of guidance and instruction? Ironically, I tend to do it by how girthy the algorithmic affirmation of the YouTuber in question is, relying on black box technology to understand the impact of this unknowable alterity that simply Kant be denied.

JW: No, I don’t think prompt engineering will be 350k. What they are calling prompt engineering right now is not that complex. The real opportunity here is making your own product.

On the freelance site Upwork, which is one of the most popular freelancing sites, there are currently 116 jobs that come up when searching for prompt engineering. That is a very small number, and as I go down the list, I see that not all these jobs are for prompt engineering. I see the average hourly wage looks to be about $40 per hr. Where this goes in the future remains to be seen. For those interested in the subject of Prompt Engineering, this is a good resource

I have an interest in OpenAI and UiPath, and the use of RPA, or robotic process automation, and GPT.

These two technologies have a lot of use cases. Software robots can be programmed to do anything you can do on your computer, go to specific websites, download information, open email, anything. This in conjunction with GPT is, I think, the more useful direction.

Also, fine-tuning custom models is where it’s at, which is I guess a kind of prompt engineering? Not from what I have seen on YouTube. Custom model training is called fine tuning and is the more programmatic side of OpenAI.

MS: Describe your own artistic practices with AI. What applications do you use? You mentioned using ChatGPT, Midjourney, Blender, and Three.js to create websites like this. What about that intersection yields interesting possibilities for you?

JW: I am currently writing a science fiction trilogy called ‘The Immortality Promotion’. Though I am not using A.I. to write this, the story is about A.I. It is a story about Sean, a boy who is being raised in an A.I.-driven artificial environment. The A.I. is encouraging him to stay for the duration of his life inside the simulation, however, he does also have the option of leaving his ‘facility’ and going out into the real world. He has school mates, one of whom is a lizard, Mr. Gobi, another is his best friend Christopher the Ant. He develops a romantic ‘first love’ relationship with a fellow classmate Princess Jara. In his day to day schooling he is being taught science, but Princess Jara has persuaded him to instead study ‘magic’, in her attempt to get him to go deeper into the artificial world. She tells him magic is only real inside the simulation, and she uses tarot cards to help him learn about magic. She tells him ‘magic’ is what he wants, and that science will break his heart.

In conjunction with, and part of the overall vision of this art piece, I am also teaching myself to code and I am somewhat along the way to creating a tarot reading AI that uses GPT-3.5-turbo.

This app is kind of a marketing stunt that accompanies the release of my sci-fi novel. It is also just a useful exercise in the learning of web development.

I do want to say that this is a massive undertaking. I am definitely making progress, but it’s a big job, especially since I am still a novice coder. However, it is coming along.

It is the tarotreading.ai app that will combine GPT and three.js. I have used A.I. image software to remix the design of the classic Rider deck.

The intersection of all these technologies is vast. Storytelling will definitely expand with the coming tech. Movie making will be democratized. Many writing jobs will be taken over by OpenAI. However, the very inner ineffable heart of poetry will not likely ever be replaced by GPT, not yet. I do want to point out here that the art of poetry is of little economic use. However, as the Hegelian dialectic unfolds, it’s possible that humans may look at human poetry with, perhaps, a little more respect, and again, not in the short term. In the short term I expect this will be like the enclosure movement, it will displace before it enhances.